An Algorithm to Read Your Mind

Problem

Museum curators have a very hard job. They not only have to predict which pieces of art most people will find interesting, but they also have to arrange the museum so that each visitor has the best possible experience. This is difficult because people have very different preferences, and a curator can’t possibly know which pieces visitors will actually find the most interesting. However, this is not the only problem at museums. Many people walk into a museum without a particular goal. They wander around and may come across pieces they like, and they will probably spend longer looking at those pieces because they are intrigued by their shapes, colors, histories, etc. But what if a visitor wants to see more pieces similar to the ones they liked? The problem is that it’s hard to have a custom experience at a museum. If you find pieces you like, wouldn’t it be cool if you could be guided to more pieces that might interest you? And what if this could be done without you explicitly communicating these preferences? Imagine how exciting it would be if the museum exhibit could read your mind!

Figure 1: Fortune Tellers Use Cold-Reading to Know What You’re Thinking

Source: https://travel67.files.wordpress.com/2013/05/6459726-3-se-470-new.jpg

Solution

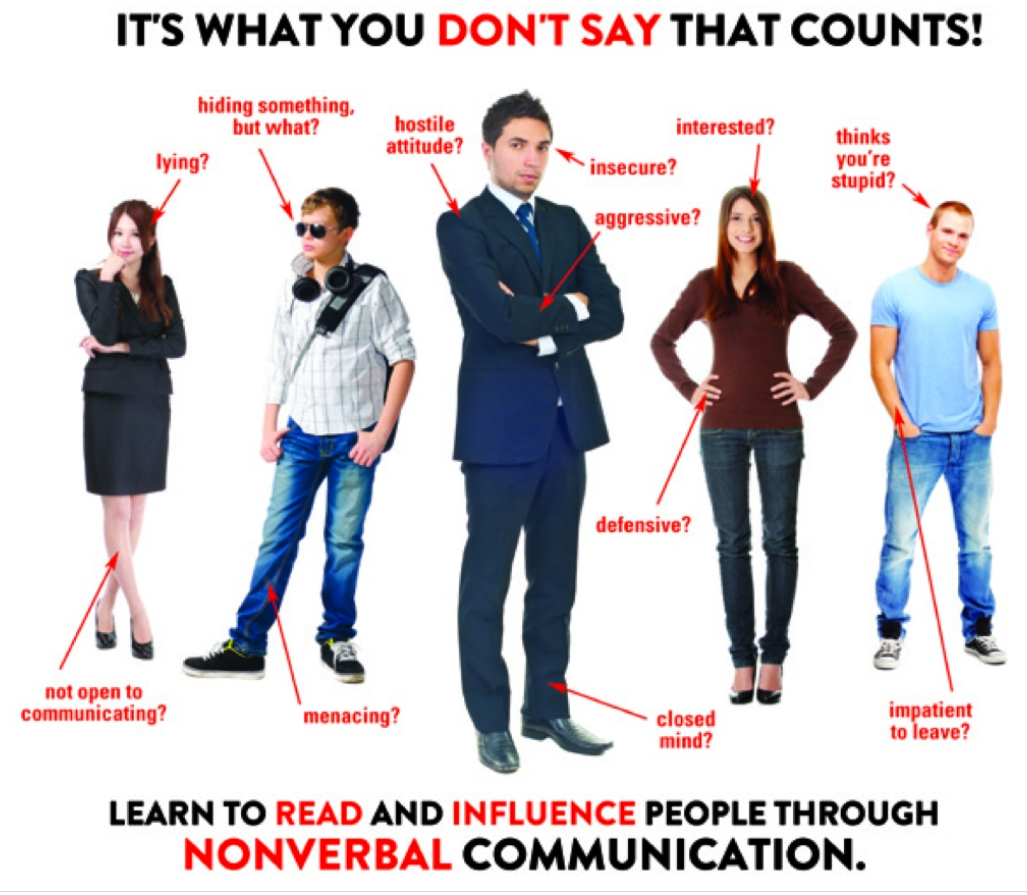

It’s not magic! Humans were communicating non-verbally, through their bodies, their faces, and in particular their eyes, for thousands of years before they used words. Fortune tellers and magicians have used cold-reading techniques for centuries, convincing sceptics that they can actually read minds (Figure 1). This project will focus on using these techniques to customize each visitor’s experience at the museum to their own tastes and preferences, not only directing them to pieces on display, but even helping them experience pieces that are hidden away in storage.

Cameras are already placed all over the museum. Imagine if these cameras could track and analyze the eyes, face, and body language of every visitor who walks into the museum. The algorithm could track a visitor’s eyes and record how long they spend looking at each object. It could also analyze facial expressions (happiness, boredom, confusion) and develop a profile for the visitor: what they looked at most, what they avoided, and what emotions they non-verbally expressed at each exhibit (Figure 2). We would then use artificial intelligence or machine learning algorithms to predict which artworks each individual would find most interesting, and guide the visitor in the direction of similar pieces. This way each person has a customized tour that can be modified along the way.

There are a couple of reasons this is particularly cool. First, the more often a person visits the museum, the better the program will get at determining the types of pieces they enjoy. Second, a person may not even be consciously aware of why they like a certain object. For example, they may be drawn to paintings with a certain color pattern, and the goal would be for the algorithm to pick up on this pattern and direct them to similar paintings. This data would also be invaluable to curators, who would now have information about which pieces are the most popular. They could then modify the displayed artworks, showing pieces from the collection that may not otherwise have been shown.
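
The profiling and recommendation steps described above could be sketched roughly as follows. This is only an illustration under stated assumptions, not the project’s actual algorithm: the artwork catalogue and its tags are made up, the dwell times and emotion scores are assumed to come from a camera-based tracking system that is not shown here, and cosine similarity is just one simple way to compare a visitor profile with an artwork.

```python
from collections import defaultdict
from math import sqrt

# Hypothetical catalogue: every artwork is described by a set of tags.
# In a real museum these would come from the collection database.
ARTWORKS = {
    "sunflowers": {"painting", "warm-colors", "still-life"},
    "water_lilies": {"painting", "cool-colors", "landscape"},
    "starry_night": {"painting", "cool-colors", "landscape", "night"},
    "bronze_horse": {"sculpture", "bronze", "animal"},
    "marble_bust": {"sculpture", "marble", "portrait"},
}


def build_profile(observations):
    """Turn camera observations into a weighted tag profile.

    Each observation is (artwork_id, dwell_seconds, emotion_score).
    emotion_score is an assumed value in [-1, 1] produced by some
    facial-expression model (-1 = bored, +1 = delighted).
    """
    profile = defaultdict(float)
    for artwork_id, dwell_seconds, emotion_score in observations:
        # Longer looks and happier expressions both raise the weight.
        weight = dwell_seconds * (1 + emotion_score)
        for tag in ARTWORKS[artwork_id]:
            profile[tag] += weight
    return dict(profile)


def score(profile, tags):
    """Cosine similarity between the profile and an artwork's tag set."""
    dot = sum(profile.get(tag, 0.0) for tag in tags)
    norm_profile = sqrt(sum(w * w for w in profile.values()))
    norm_tags = sqrt(len(tags))
    if norm_profile == 0 or norm_tags == 0:
        return 0.0
    return dot / (norm_profile * norm_tags)


def recommend(profile, seen, k=2):
    """Return the k unseen artworks that best match the profile."""
    candidates = [a for a in ARTWORKS if a not in seen]
    ranked = sorted(candidates, key=lambda a: score(profile, ARTWORKS[a]),
                    reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # A visitor lingers happily at one painting and glances at a sculpture.
    observations = [("water_lilies", 90, 0.5), ("bronze_horse", 5, -0.5)]
    profile = build_profile(observations)
    print(recommend(profile, seen={"water_lilies", "bronze_horse"}))
    # prints ['starry_night', 'sunflowers']
```

Because the profile is simply a running sum of weights, observations from later visits can be added to it, which is one way the recommendations could improve the more often a person comes to the museum.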

Figure 2: Non-Verbal Communication Tells What You Are Thinking

Source: http://www.communicatecharisma.com/ccm-content/uploads/2014/01/dont-say.jpg